This post was developed as part of an engagement project for my Education Doctorate coursework. It explores how Christian values can inform ethical practices in computer science education, connecting ISTE Standard 4.7 with service learning, civic engagement, and professional responsibility.

In my teaching, I prioritize biblical principles, ethical frameworks, and practical classroom strategies. Here, I consider how faith and pedagogy can come together to prepare students as both skilled technologists and ethical leaders.

Values and Ethical Issues

As a Christian computer science educator, I see my values and ethical principles related to ISTE Standard 4.7 as reflecting the intersection of my faith and my vocation. My mission is to help students excel in technical knowledge and to approach their digital lives in a way that reflects Christian values in their work and interactions.

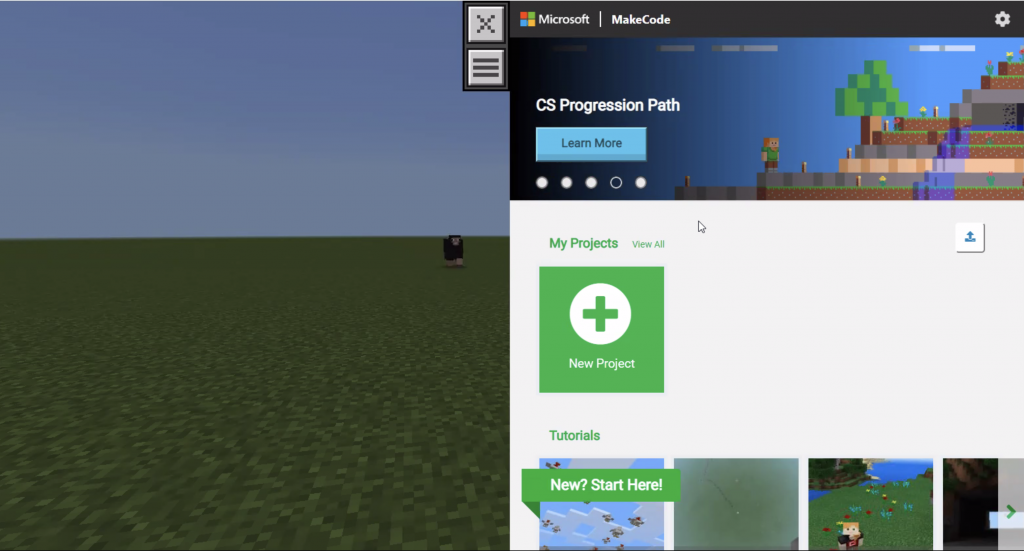

I envision my students harnessing the power of computer science to promote the common good, driving positive societal change through collaborative service-learning experiences and the responsible exploration of cutting-edge technologies like AI. By embedding ethical principles and a heart for service into their digital lives, they will lead with integrity, champion justice, and steward technology as a force for human flourishing.

Civic Responsibility and Christian Responses

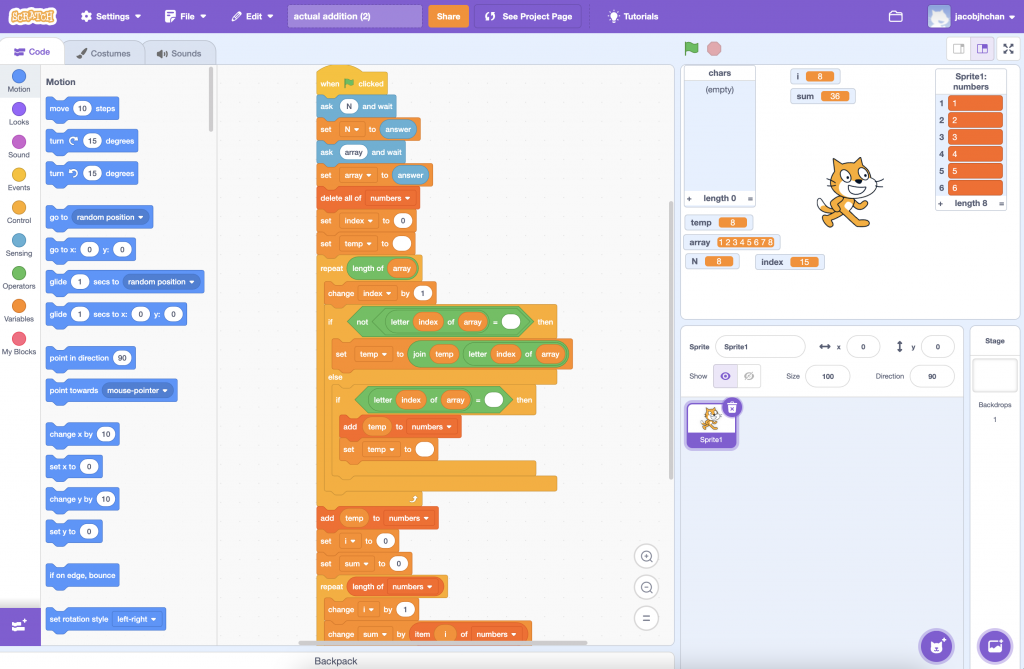

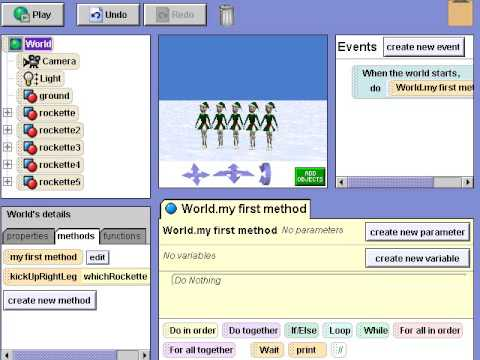

I encourage students to apply their technical knowledge for the betterment of society, serving the community and improving technological literacy while meeting course outcomes. Integrating service learning into the curriculum gives students a unique opportunity to engage in meaningful, community-centred projects. In a systematic review of service learning in computer and information science, Robledo Yamamoto et al. (2023) identify benefits, challenges, and best practices. They find that service learning strengthens students’ practical skills and community awareness, but they also emphasize the need to deliver greater benefits to all stakeholders, especially non-profit community partners. Such experiences encourage students to reflect on their values and consider the ethical implications of their work, helping them approach their careers with integrity and compassion.

Self-reflection is a cornerstone of effective collaboration, particularly in software engineering, where the success of a project often hinges on the team’s ability to communicate and adapt to client needs, as noted by Groeneveld et al. (2019). Drawing on these principles, I encourage students to develop socially responsible practices, recognizing the impact their work can have on their digital and physical communities.

This aligns with Matthew 22:37–39 in the English Standard Version (ESV) of the Bible: “And he said to him, ‘You shall love the Lord your God with all your heart and with all your soul and with all your mind. This is the great and first commandment. And a second is like it: You shall love your neighbor as yourself.’”

This principle also supports ISTE Standard 4.7a: “Inspire and encourage educators and students to use technology for civic engagement and to address challenges to improve their communities.” Students learn to carry socially responsible practices into both their digital and physical communities.

Integrating Christian Values in Computer Science Education

Christian values can provide a strong foundation for computer science education by fostering ethical decision-making among students. I emphasize discernment, integrity, and humility, modelling for students how to weigh the moral implications of their work carefully so that they become both skilled technologists and ethical leaders. I teach frameworks such as the ACM Code of Ethics and Professional Conduct (Anderson, 2018) and the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, which provide valuable guidance for navigating complex moral dilemmas.

An important aspect of this instruction is teaching students methods and tools for discernment. One framework I use is deontological ethics, particularly divine command theory and natural law. I also guide students through scenario-based exercises in which they evaluate the potential outcomes of their technical work against ethical principles. For example, students examine how law enforcement uses historical crime data, social media activity, and demographic information to forecast potential criminal activity. Working through cases like this helps students think critically and develop a comprehensive approach to ethics that balances their technical knowledge with moral clarity.

I believe it is crucial to model ethical decision-making in my teaching and invite students to emulate this approach professionally. This aligns with the Apostle Paul’s message to the Corinthian church: “Be imitators of me, as I am of Christ” (1 Corinthians 11:1, ESV, 2001). Such practices encourage them to reflect on how their work impacts others and how their faith informs their responsibilities as computer scientists.

This principle supports ISTE Standard 4.7a, which focuses on using technology to improve communities. Students are encouraged to exhibit ethical behaviour and lead with integrity, which prepares them to become ethical leaders in the tech industry.

AI/Machine Learning and Theological Perspectives

Advancements in artificial intelligence offer tremendous opportunities for innovation but also demand critical examination through the lens of Christian theology. Biblical teachings on human dignity, stewardship, and the risks of over-reliance on technology provide a valuable framework for assessing the societal implications of AI. In my teaching, I encourage students to reflect on the intersections between their work in AI and the inclusive, nonsectarian Christian values advocated by Horace Mann, emphasizing the ethical responsibility to steward technology wisely and compassionately. Discussions often centre on the potential benefits and dangers of AI, particularly its impact on society and individual well-being, as well as the role of computer scientists as ethical leaders.

Oversby and Darr (2024) suggest that a materialistic worldview leads many AI researchers and enthusiasts to envision Artificial General Intelligence (AGI) with autonomous goals, potentially posing risks to humanity. This contrasts with the classical Christian worldview, which holds that human beings possess a soul and that human intelligence is therefore not reducible to algorithms. Schuurman (2015, p. 20) notes that, regardless of advances in AI, the distinctive nature of human beings, made in God’s image with free will and consciousness, should remain unquestioned.

This principle ties to ISTE Standard 4.7c, which calls on educators to support students in critically examining the sources of online media and identifying underlying assumptions. Students are invited to balance innovative problem-solving with discernment, acting as responsible stewards of both technology and humanity.

Team Dynamics in Development Projects

Creating a healthy team environment in development projects requires intentional effort to foster collaboration, effective communication, and ethical leadership. Biblical principles such as servant leadership (Philippians 2:5-8), teamwork (Ecclesiastes 4:9-12), and conflict resolution (Matthew 18:15-17) offer guidelines for developing these qualities. 1 Corinthians 12 provides a powerful metaphor for the church as a body, emphasizing the value of each member’s unique contributions and the importance of working together harmoniously. Drawing from this, students are encouraged to lead with humility, collaborate with integrity, and approach conflicts as opportunities for growth and mutual understanding.

Diaz-Sprague and Sprague (2024) identify significant gaps in ethical training and teamwork skills across technology disciplines, particularly in computer science and engineering. They note the inconsistent application of key teamwork principles and propose structured exercises focused on communication and cooperation. These exercises, which have received positive feedback from students, highlight the importance of intentional training in these areas to prepare computer science students for real-world workplace challenges. Incorporating such activities into the curriculum allows students to practice these skills in a controlled setting and fosters a culture of respect, inclusion, and collaboration that carries into their professional environments.

In my software engineering courses, students engage in role-playing scenarios to address team conflicts, reflecting on how conflict resolution principles can transform challenges into opportunities for improving relationships and productivity. They also participate in team retrospectives, where they assess their group dynamics, communication, and decision-making processes, identifying areas for improvement. These practices align with the principles of servant leadership, encouraging students to prioritize the success and well-being of their team members while contributing their best efforts to shared goals.

This approach aligns with ISTE Standard 4.7b, which emphasizes fostering a culture of respectful interactions, particularly in online and digital collaboration. By grounding teamwork practices in biblical principles and integrating structured exercises that build essential skills, I help students learn to navigate the complexities of team dynamics with grace and professionalism.

References

Anderson, R. E. (2018). ACM code of ethics and professional conduct. Communications of the ACM, 35(5), 94–99. https://doi.org/10.1145/129875.129885

Diaz-Sprague, R., & Sprague, A. P. (2024). Embedding moral reasoning and teamwork training in computer science and electrical engineering. The International Library of Ethics, Law and Technology, 67–77. https://doi.org/10.1007/978-3-031-51560-6_5

Groeneveld, W., Vennekens, J., & Aerts, K. (2019). Software engineering education beyond the technical: A systematic literature review. arXiv. https://doi.org/10.48550/arxiv.1910.09865

Oversby, K. N., & Darr, T. P. (2024). Large language models and worldview – An opportunity for Christian computer scientists. Christian Engineering Conference. https://digitalcommons.cedarville.edu/christian_engineering_conference/2024/proceedings/4

Robledo Yamamoto, F., Barker, L., & Voida, A. (2023). CISing up service learning: A systematic review of service learning experiences in computer and information science. ACM Transactions on Computing Education. https://doi.org/10.1145/3610776

Schuurman, D. C. (2015). Shaping a digital world: Faith, culture and computer technology. InterVarsity Press. https://www.christianbook.com/shaping-digital-faith-culture-computer-technology/derek-schuurman/9780830827138/pd/827138

The Holy Bible ESV: English Standard Version. (2001). Crossway Bibles.